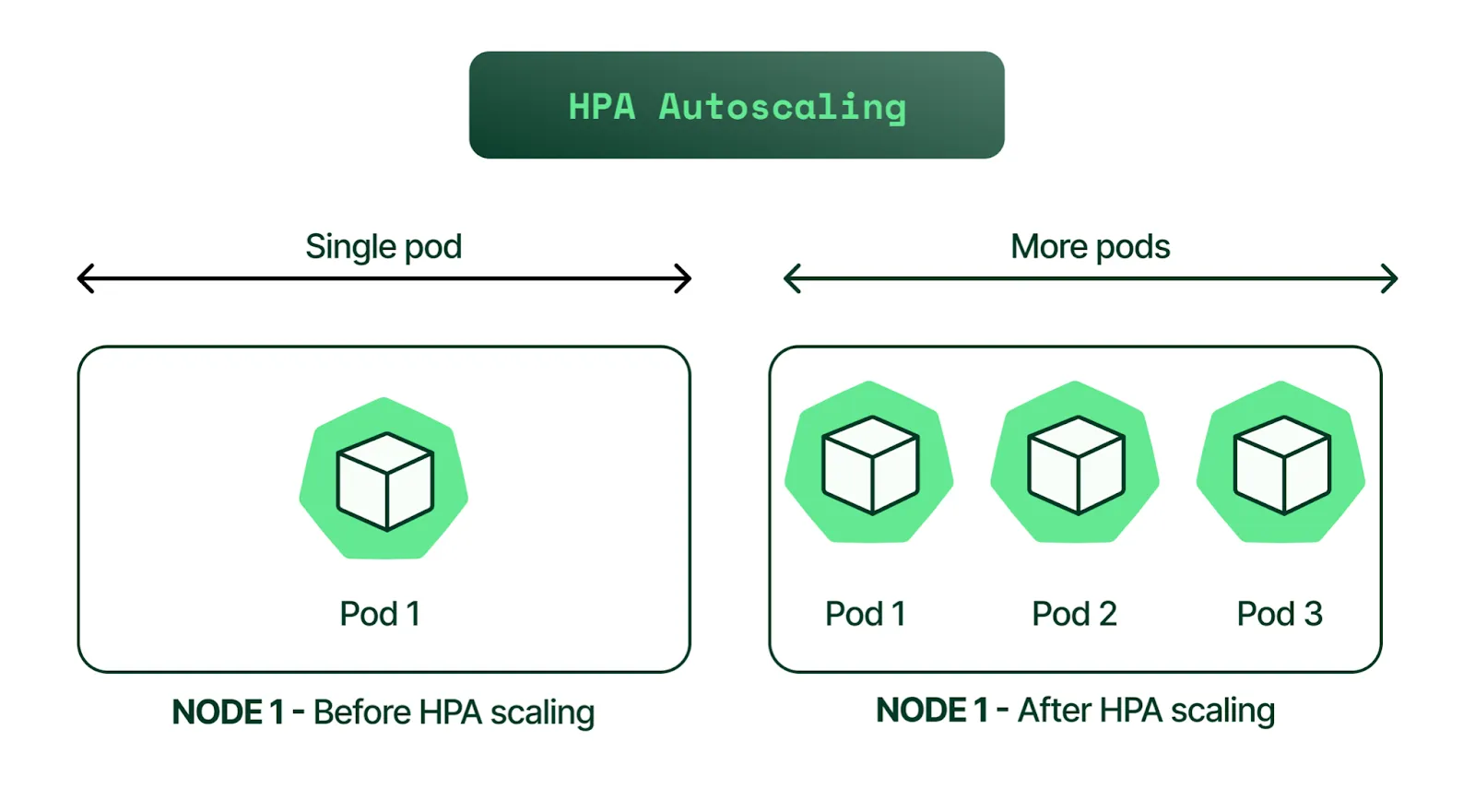

Horizontal Pod Autoscaling (HPA) is a Kubernetes feature that automatically scales the number of pods in a deployment based on resource utilization metrics. HPA is important for scaling deployments in Kubernetes because it allows you to ensure that your application has enough resources to handle increased traffic without overprovisioning and wasting resources.

To use HPA in Kubernetes, you need to have a Kubernetes cluster up and running. You also need to have a Deployment object defined in your Kubernetes manifest file. Additionally, you need to have a metrics server installed to collect resource utilization metrics from the pods in your deployment.

Horizontal Pod Autoscaling (HPA) works by monitoring the resource utilization of the pods in deployment and adjusting the number of replicas to maintain a desired level of resource utilization. There are two types of HPA: resource-based and custom metrics-based.

Resource-based HPA scales the number of replicas based on CPU or memory usage. Custom metrics-based HPA scales the number of replicas based on custom metrics such as request rate or response time.

Here’s an example of a resource-based HPA configuration for a Deployment:

apiVersion: apps/v1

kind: Deployment

metadata:

name: example-deployment

labels:

app: example

spec:

replicas: 3

selector:

matchLabels:

app: example

template:

metadata:

labels:

app: example

spec:

containers:

- name: example-container

image: nginx

resources:

limits:

cpu: 500m

memory: 512Mi

requests:

cpu: 200m

memory: 256Mi

---

apiVersion: autoscaling/v2

kind: HorizontalPodAutoscaler

metadata:

name: example-hpa

spec:

scaleTargetRef:

apiVersion: apps/v1

kind: Deployment

name: example-deployment

minReplicas: 1

maxReplicas: 10

metrics:

- type: Resource

resource:

name: cpu

target:

type: Utilization

averageUtilization: 50

---

apiVersion: v1

kind: Service

metadata:

name: example-service

spec:

selector:

app: example

ports:

- name: http

port: 80

targetPort: 80

type: NodePort

This configuration sets up an HPA for a Deployment named “example-deployment” that scales based on CPU utilization, targeting an average utilization of 50%. The HPA will maintain a minimum of 1 replica and a maximum of 10 replicas.

To implement HPA in Kubernetes, you need to create a HorizontalPodAutoscaler object that references the Deployment you want to scale. You also need to specify the scaling metric and target utilization or value.

Here’s an example of creating an HPA object for a Deployment:

$ kubectl autoscale deployment example-deployment --cpu-percent=50 --min=1 --max=10This command creates an HPA object that scales the “example-deployment” Deployment based on CPU utilization, targeting an average utilization of 50%. The HPA will maintain a minimum of 1 replica and a maximum of 10 replicas.

Some best practices for using HPA in Kubernetes include setting appropriate resource limits for your pods, using resource requests to ensure that your pods have the resources they need, and monitoring performance with the right metrics.

Here’s an example of setting resource limits and requests for a pod in Kubernetes:

apiVersion: v1

kind: Pod

metadata:

name: my-pod

spec:

containers:

- name: my-container

image: my-image

resources:

limits:

cpu: "1"

memory: "1Gi"

requests:

cpu: "0.5"

memory: "512Mi"In this example, we define a Pod named my-pod with a single container named my-container. We also set the resource limits and requests for the container using the resources field.

The limits the field specifies the maximum amount of CPU and memory that the container can use, while the requests field specifies the minimum amount of CPU and memory that the container requires.

In this example, we set a CPU limit of 1 core and a memory limit of 1 gigabyte (1Gi), and a CPU request of 0.5 core and a memory request of 512 megabytes (512Mi). These values can be adjusted based on the specific needs of your application.

In conclusion, Horizontal Pod Autoscaler (HPA) is a powerful feature in Kubernetes that allows you to dynamically scale your application based on its resource utilization. By using HPA, you can ensure that your application has the right amount of resources available to handle changes in traffic and usage patterns, while also reducing costs and improving performance.

In this article, we have covered the basics of HPA, including how it works and the benefits it provides. We have also discussed how to set up HPA for your Kubernetes cluster and how to configure it to scale your application based on CPU utilization.

By following the steps outlined in this article, you should now be able to implement HPA in your own Kubernetes deployments and take advantage of its many benefits. Whether you are running a small application or a large-scale distributed system, HPA can help you achieve greater efficiency, flexibility, and scalability in your deployment.